Performance in Mandelbrot Set Computation

I want to have a nice poster of a Mandelbrot set, say 75×50 cm². At 300 DPI printing resolution, I need to compute a lot of pixels:

$$\frac{75}{2.54} \cdot \frac{50}{2.54} \cdot 300^2 = 52\,312\,605$$

The computation shall not take forever. When it takes 1000 nano seconds to compute one pixel, then it will take almost one minute for the whole image.

Computing a pixel in a Mandelbrot set is not hard. The set is defined on the complex plane $\mathbb C$ with an iterative procedure. Take the point $z_0 \in \mathbb C$. Then iterate:

$$z_{n+1} = z_n^2 + z_0 \,.$$

Sometimes one also calls $z_0 = c$ and write that equation as

$$z_{n+1} = z_n^2 + c \,.$$

The point $c$ belongs to the Mandelbrot set if that does not diverge for $n \to \infty$. The number $c = 1$ does not belong to the set, it diverges. However, $c = -1$ is contained in the set. The iteration will be $-1$, $0$, $-1$, and repeat itself. Once a number has $|z_n| > 2$, we know that it will diverge.

In order to create a pixel image, one maps the pixels to complex numbers. Then one iterates until $|z_n| > R$ for some $R \geq 2$. I have chosen $R = 5$ for my examples. Then I note the iteration number where $|z_n|$ exceeds the radius. That is the value that I note for the pixel. After 100 iterations, I stop. If it has not diverged, I put in the number 100.

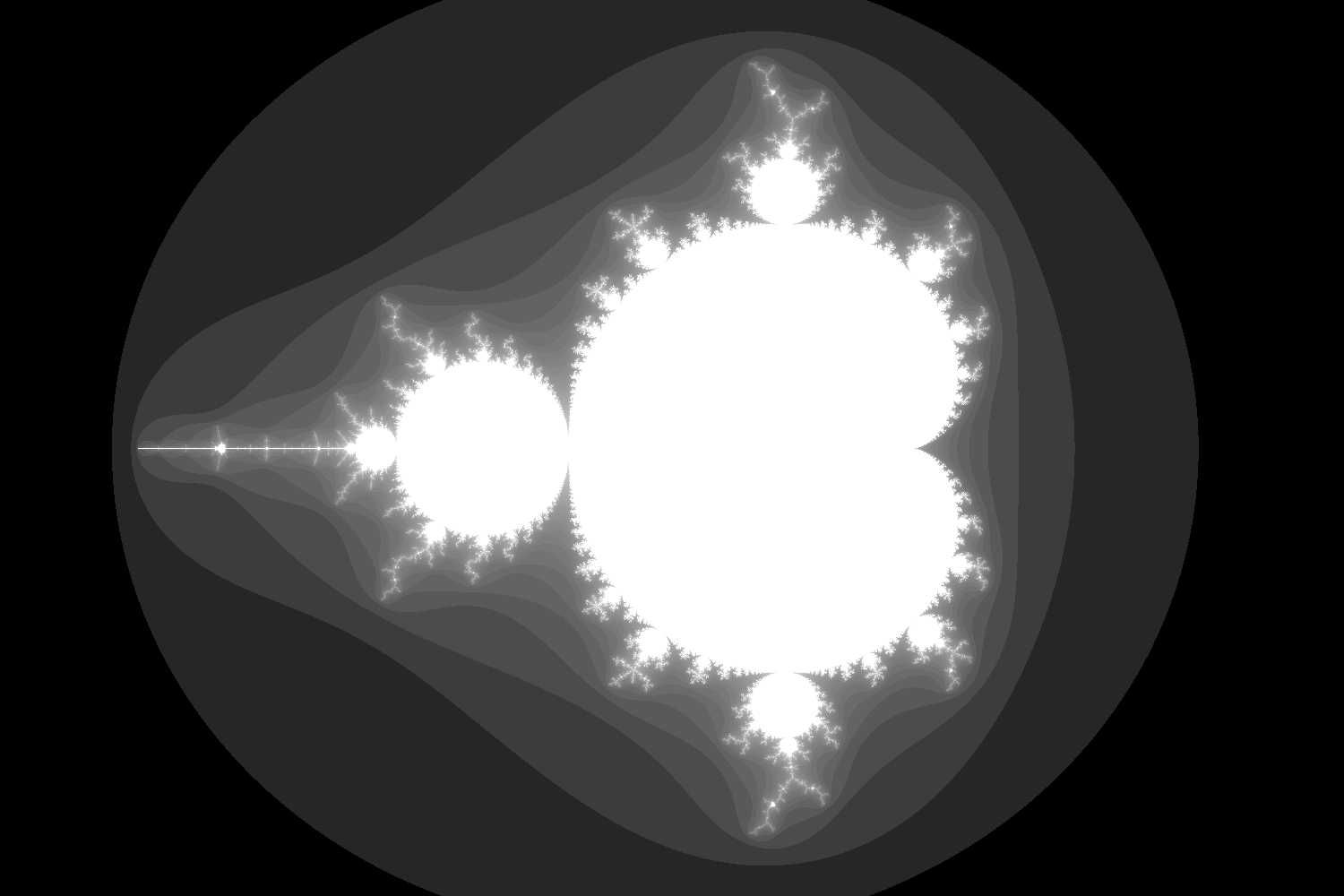

An image is created by taking the logarithm of the iteration number and spreading the values from black to white:

First Python Implementation

Implemententing this in Python is not that hard. I need two loops over the

height and width of the image. The numbers lx and ly give the number of

pixels in the directions. Then I have a minimum real and imaginary part and a

step size d.

for i in range(lx): for j in range(ly): x = re_min + i * d y = im_min + j * d c = x + 1.0j * y z = 0.0 + 0.0j for it in range(100): z = z**2 + c a = np.abs(z) if (a > radius): iters[i, j] = it break

There is some helper code around, but that is the main code. I stop iterating when it has diverged.

This simple Python implementation takes 59000 nano seconds per pixel on my laptop. This means that the whole poster will take around an hour to compute! That is doable, but can it be done faster?

NumPy Implementation

Python itself is not that fast. It is not really the language, more the implementation of the language. Either way, it just takes too long to compute the data for the poster.

The NumPy array implementation for Python is written in C and performs the internal loops in compiled C functions. So although the programmer does not have to deal with C, it still uses C below.

Writing the algorithm in Python with NumPy could look like this:

for i in range(100): z = z**2 + c a = np.abs(z) selection = (a >= radius) & (iters == 0) sel = np.where(selection) iters[sel] = i if selection.all(): break

The first two lines in the for loop are to be read as arrays. We take all the

values for $z$ and square them. Then we add the whole array of $c$ onto that.

The loops are now compiled C code. The following lines then look at the

elements where the absolute value exceeds the radius and where there are no

iterations written down. Then it writes down the current iteration number into

those fields.

The problem with this approch is that a lot of useless work is done. Even when some pixel already has its value, the complex multiplications are still done. This is a waste.

Still, this implementation only needs 2800 nano seconds per pixel. That is a speedup of 21, which is great! I just do not see any way to make it faster within Python, though.

First C/C++ Implementation

Using a native compiled language like C or C++ can make this much faster. For

this we take an array escape_iter which is linearized. Then we loop over

height and width and perform the same computation as before. The code looks as

follows:

for (size_t y = 0; y < ly; ++y) { for (size_t x = 0; x < lx; ++x) { double z_re = 0.0; double z_im = 0.0; for (size_t iter = 0; iter < max_iter; ++iter) { auto const new_z_re = z_re * z_re - z_im * z_im + c_re(x); auto const new_z_im = 2 * z_re * z_im + c_im(y); z_re = new_z_re; z_im = new_z_im; auto const abs = z_re * z_re + z_im * z_im; if (unlikely(abs >= radius)) { escape_iter[idx(x, y)] = iter; break; } } } }

Compiled with GCC using -O3, this will get us to 92 nano seconds per pixel!

In comparison with first Python version, that is now a factor 641 faster.

Adding Threads

From here it is relatively easy to distribute the pixels onto the various cores

of my CPU. Just adding a single OpenMP directive does the trick. The following

line gets added before the for loops:

#pragma omp parallel for collapse(2)

On my laptop, it now takes only 45 nano seconds per pixel. The speedup is already at 1311. This is a day-and-night difference to the first Python implementation!

SIMD Optimization

One can do slightly better than that. The current data layout is not really suited for using SIMD, the vector units in the CPU. My laptop CPU (Intel Sandy Bridge) supports the AVX instruction set and can do operations with four floating point numbers (double precision) at the same time. Since I want to do the same computation for each pixel, one should be able to make use of that. It could become up to four times as fast as before.

One caveat is that now all the code can be put into vector instructions. When doing so, one is doing more work than needed. A group of four pixels has to be iterated until the last one has diverged. In the beautiful parts of the sets, that could happen often. However, the other computations are virtually for free, so there is no harm in it.

Programming this is more cumbersome, though. One needs to introduce arrays that have the same size as the vector length. They also have to be aligned properly such that they can be loaded in one go. Then I need to do extra work and keep track of the finished pixels. All this adds some overhead to the code. The implementation is here:

#pragma omp parallel for collapse(2) for (size_t y = 0; y < ly; ++y) { for (size_t bx = 0; bx < blocks_x; ++bx) { alignas(veclen * sizeof(bool)) bool finished[veclen] = {false}; alignas(veclen * sizeof(bool)) bool written[veclen] = {false}; alignas(veclen * sizeof(double)) double z_re[veclen] = {0.0}; alignas(veclen * sizeof(double)) double z_im[veclen] = {0.0}; alignas(veclen * sizeof(double)) double new_z_re[veclen]; alignas(veclen * sizeof(double)) double new_z_im[veclen]; alignas(veclen * sizeof(double)) double c_re[veclen] = {0.0}; for (size_t v = 0; v < veclen; ++v) { c_re[v] = get_c_re(bx, v); } double c_im = get_c_im(y); for (size_t iter = 0; iter < max_iter; ++iter) { #pragma omp simd aligned(z_re, z_im, new_z_re, new_z_im, finished) for (size_t v = 0; v < veclen; ++v) { new_z_re[v] = z_re[v] * z_re[v] - z_im[v] * z_im[v] + c_re[v]; new_z_im[v] = 2 * z_re[v] * z_im[v] + c_im; z_re[v] = new_z_re[v]; z_im[v] = new_z_im[v]; auto const abs = z_re[v] * z_re[v] + z_im[v] * z_im[v]; if (!finished[v] && unlikely(abs > radius)) { finished[v] = true; escape_iter[idx(bx, y) * veclen + v] = iter; } } bool all_finished = true; for (size_t v = 0; v < veclen; ++v) { all_finished &= finished[v]; } if (all_finished) { break; } } } }

All in all it seems to be worth it. One pixel is down to 33 nano seconds. The overall speedup is 1787, which means that my poster only takes in the order of a minute to compute.

Conclusion

The Python version was the easiest to implement. It did not run very fast, though. Using a language that allows to get closer to the machine (like C or C++) exposes more opportunities to make it faster. If performance is top priority, then C or C++ give the tools to make it fast.

One should not neglect algorithmic efficiency, though. Choosing a more efficient algorithm usually gives greater improvements than tuning the performance of an implementation.

A summary of the various implementations:

| Implementation | Nano Seconds per Pixel | Speedup |

|---|---|---|

| Python simple | 59000 | 1 |

| Python NumPy | 2800 | 21 |

| C++ | 92 | 641 |

| OpenMP Threads | 45 | 1311 |

| SIMD Optimized | 33 | 1787 |

All the programs can be found in the repository: https://github.com/martin-ueding/mandelbrot-performance